IoU Loss

Emphasizes the foreground.

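```python
import torch

def _iou(pred, target):
    # pred, target: tensors of shape (B, C, H, W) with values in [0, 1]
    b = pred.shape[0]
    IoU = 0.0
    for i in range(b):
        # soft intersection and union for sample i
        Iand1 = torch.sum(target[i] * pred[i])
        Ior1 = torch.sum(target[i]) + torch.sum(pred[i]) - Iand1
        IoU1 = Iand1 / Ior1
        # accumulate the per-sample IoU loss (1 - IoU)
        IoU = IoU + (1 - IoU1)
    # average over the batch
    return IoU / b
```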
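The per-sample loop above can also be written without a Python loop. The following is only a sketch: the name `iou_loss_vectorized` and the `eps` term (added to avoid division by zero when both prediction and target are empty) are assumptions and are not part of the original function.

```python
import torch

def iou_loss_vectorized(pred, target, eps=1e-7):
    """Soft IoU loss averaged over the batch; eps is an added assumption."""
    dims = tuple(range(1, pred.dim()))           # all non-batch dimensions
    inter = (pred * target).sum(dim=dims)        # soft intersection per sample
    union = pred.sum(dim=dims) + target.sum(dim=dims) - inter
    return (1.0 - inter / (union + eps)).mean()  # mean of (1 - IoU)

# Toy check: the top half of a 4x4 map is foreground.
target = torch.zeros(2, 1, 4, 4)
target[:, :, :2, :] = 1.0
perfect = target.clone()       # prediction identical to the target
disjoint = 1.0 - target        # prediction covers only the background

loss_perfect = iou_loss_vectorized(perfect, target)
loss_disjoint = iou_loss_vectorized(disjoint, target)
```

A perfect prediction should give a loss close to 0 and a fully disjoint one a loss close to 1, which is why this loss pushes the network toward covering the foreground.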

Edge loss in EGNet

Paper: http://mftp.mmcheng.net/Papers/19ICCV_EGNetSOD.pdf

GitHub: https://github.com/JXingZhao/EGNet/

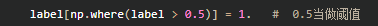

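In EGNet, the label binarized at a threshold of 0.5 is what gets fed into the edge loss function.

The regular loss is computed with the label binarized at a threshold of 0.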
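To make the difference between the two thresholds concrete, here is a small NumPy sketch. The helper `binarize` and the sample values are hypothetical. Unlike EGNet's own loader, it maps every pixel at or below the threshold to 0, which is a simplification.

```python
import numpy as np

def binarize(label, threshold):
    """Return a float32 mask: 1 where label > threshold, else 0 (hypothetical helper)."""
    return (label > threshold).astype(np.float32)

# A hypothetical soft label that is already scaled to [0, 1].
soft = np.array([[0.0, 0.2, 0.6],
                 [0.4, 0.9, 1.0]], dtype=np.float32)

edge_target = binarize(soft, 0.5)  # stricter mask, as used for the edge loss
sal_target = binarize(soft, 0.0)   # any non-zero pixel counts as foreground
```

With these values, `edge_target` keeps only the three pixels above 0.5, while `sal_target` keeps all five non-zero pixels.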

```python
import numpy as np
import torch
import torch.nn.functional as F

def load_edge_label(im):
    """
    pixels > 0.5 -> 1
    Load label image as a 1 x height x width float32 array.
    The leading singleton dimension is required by the loss.
    """
    label = np.array(im, dtype=np.float32)
    if len(label.shape) == 3:
        label = label[:, :, 0]      # keep only the first channel
    label = label / 255.            # assumes pixel values in 0..255
    label[label > 0.5] = 1.
    label = label[np.newaxis, ...]
    return label

def EGnet_edg(d, labels_v):
    # d: edge logits of shape 1 x H x W
    # labels_v: image-like H x W (or H x W x C) array with values in 0..255
    # torch.eq needs a tensor, so the NumPy label is converted first
    target = torch.from_numpy(load_edge_label(labels_v)).to(d.device)
    pos = torch.eq(target, 1).float()
    neg = torch.eq(target, 0).float()
    num_pos = torch.sum(pos)
    num_neg = torch.sum(neg)
    num_total = num_pos + num_neg
    # class-balancing weights: the rarer edge pixels get the larger weight
    alpha = num_neg / num_total
    beta = 1.1 * num_pos / num_total
    weights = alpha * pos + beta * neg
    # reduction=None is not accepted by PyTorch; 'sum' is an assumption here
    return F.binary_cross_entropy_with_logits(d, target, weights, reduction='sum')
```
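A small worked example may help show what the weighting in `EGnet_edg` does. This is a sketch under assumptions: `balanced_weights` is a hypothetical helper that repeats the weight computation above, the toy target has 2 edge pixels out of 8, and `reduction='sum'` is assumed.

```python
import torch
import torch.nn.functional as F

def balanced_weights(target):
    """Recompute the pos/neg weights used in EGnet_edg (hypothetical helper)."""
    pos = torch.eq(target, 1).float()
    neg = torch.eq(target, 0).float()
    num_pos, num_neg = pos.sum(), neg.sum()
    num_total = num_pos + num_neg
    alpha = num_neg / num_total        # weight for edge pixels
    beta = 1.1 * num_pos / num_total   # weight for non-edge pixels
    return alpha * pos + beta * neg

# Toy target: 2 edge pixels and 6 background pixels.
target = torch.tensor([[[1., 0., 0., 0.],
                        [1., 0., 0., 0.]]])
logits = torch.zeros_like(target)      # sigmoid(0) = 0.5 everywhere

weights = balanced_weights(target)     # 0.75 on edges, 0.275 elsewhere
loss = F.binary_cross_entropy_with_logits(logits, target, weights,
                                          reduction='sum')
# With zero logits every pixel contributes weight * ln(2).
expected = weights.sum() * torch.log(torch.tensor(2.0))
```

Here alpha = 6/8 = 0.75 and beta = 1.1 * 2/8 = 0.275, so each edge pixel counts almost three times as much as a background pixel.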

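The paper binarizes the label in the dataset code. I wanted to do it directly as post-processing instead, but that only produced some strange errors.

Here is the original load_edge_label: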

```python
import os

import numpy as np
from PIL import Image

def load_edge_label(pah):
    """
    pixels > 0.5 -> 1
    Load label image as a 1 x height x width float32 array.
    The leading singleton dimension is required by the loss.
    """
    if not os.path.exists(pah):
        print('File Not Exists')
    im = Image.open(pah)
    label = np.array(im, dtype=np.float32)
    if len(label.shape) == 3:
        label = label[:, :, 0]      # keep only the first channel
    label = label / 255.            # scale 0..255 to 0..1
    label[label > 0.5] = 1.
    label = label[np.newaxis, ...]  # add the leading singleton dimension
    return label
```

Then, when loading data in the dataloader, read the processed edge_label and pass it to EGnet_edg to compute the loss.
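The dataset-side approach could look roughly like the sketch below. The class name `EdgeLabelDataset`, the `'edge_label'` key and the synthetic 2x2 image are all hypothetical; `load_edge_label` is repeated in compact form only so the example runs on its own. A real dataset would also return the input image and the saliency label.

```python
import os
import tempfile

import numpy as np
import torch
from PIL import Image
from torch.utils.data import Dataset

def load_edge_label(pah):
    """Compact copy of the loader above: pixels > 0.5 -> 1, output is 1 x H x W."""
    label = np.array(Image.open(pah), dtype=np.float32)
    if len(label.shape) == 3:
        label = label[:, :, 0]
    label = label / 255.
    label[label > 0.5] = 1.
    return label[np.newaxis, ...]

class EdgeLabelDataset(Dataset):  # hypothetical name
    def __init__(self, edge_paths):
        self.edge_paths = edge_paths

    def __len__(self):
        return len(self.edge_paths)

    def __getitem__(self, idx):
        # binarize once here, so the loss only ever sees ready-made tensors
        edge = load_edge_label(self.edge_paths[idx])
        return {'edge_label': torch.from_numpy(edge)}

# Self-check with a synthetic 2x2 edge map written to a temporary file.
tmp_dir = tempfile.mkdtemp()
edge_path = os.path.join(tmp_dir, 'edge.png')
Image.fromarray(np.array([[0, 255], [200, 0]], dtype=np.uint8)).save(edge_path)
sample = EdgeLabelDataset([edge_path])[0]
```

Doing the thresholding in `__getitem__` keeps the NumPy and PIL handling out of the training loop, which may be why the post-processing route was harder to get working.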

The paper also rescales the loss when computing it:

nAveGrad=10

```python
sal_loss = (sum(sal_loss1) + sum(sal_loss2)) / (nAveGrad * self.config.batch_size)
```
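One plausible reading of this scaling is gradient accumulation: summed losses are divided by `nAveGrad * batch_size`, and the optimizer steps once every `nAveGrad` iterations. The sketch below assumes that interpretation. The linear model, the random data and the loop length are hypothetical stand-ins, not the EGNet training code.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
nAveGrad = 10                      # value quoted above
batch_size = 1                     # stand-in for self.config.batch_size
model = torch.nn.Linear(4, 1)      # hypothetical stand-in for the network
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

num_steps = 0
optimizer.zero_grad()
for it in range(20):
    x = torch.randn(batch_size, 4)
    y = (torch.rand(batch_size, 1) > 0.5).float()
    # summed loss, then scaled so the accumulated gradient is an average
    raw = F.binary_cross_entropy_with_logits(model(x), y, reduction='sum')
    loss = raw / (nAveGrad * batch_size)
    loss.backward()                # gradients add up across iterations
    if (it + 1) % nAveGrad == 0:   # update once every nAveGrad iterations
        optimizer.step()
        optimizer.zero_grad()
        num_steps += 1
```

Under this assumption, 20 iterations result in only 2 optimizer updates, each based on the averaged gradient of 10 mini-batches.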